Summers are meant for relaxing and exploring the sunny East Coast, but this summer wasn’t one of those for me, because I made a life-changing decision: yes, I accepted a new position as a BI Architect with Crane Worldwide Logistics and moved to Houston, Texas.

Why did I accept a new position?

Well, I had been working with my previous employer and client for about 4 years, and I felt I had reached a saturation point in terms of job challenge and growth. I have grown from a purely technical person into a business and analytical one, and the credit goes to my previous boss (Jason C), who pushed me to learn beyond my boundaries. When this offer came, my first instinct was that this job would be a great opportunity to put everything I had learned into practice. This move was one of the toughest decisions I have had to make so far in my life. I thought long and hard before leaving a client who felt like an employer, a boss who was a mentor, and a friendly employer, and I am grateful to them all.

What’s Crane Worldwide?

Crane Worldwide is a full-service air, ocean, customs brokerage and logistics company. It has been in business for the last 8 years and has achieved significant growth in revenue and resources. The company is now at a tipping point, and business intelligence is one of the key areas it is investing in.

How’s the new job?

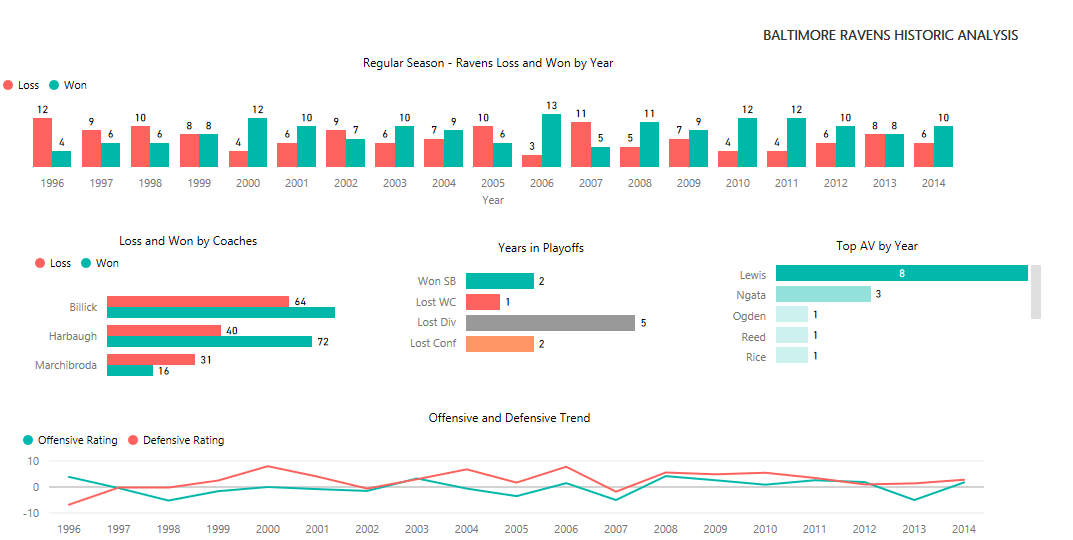

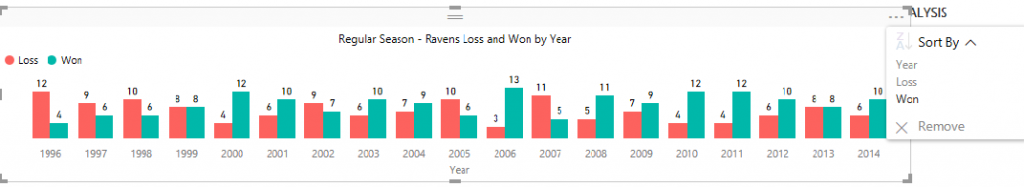

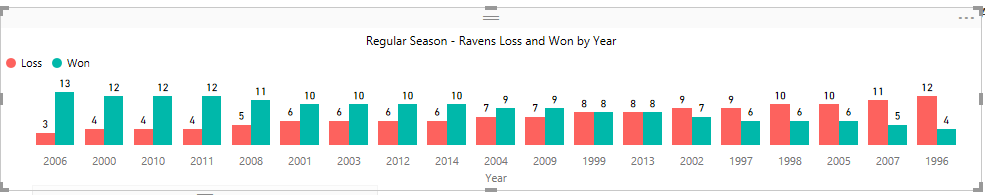

It’s been 3 months, and they have been super-fast and challenging. Logistics is a new industry to me, and although there has been a lot of learning, I have still covered only a drop in the ocean. I am very much intrigued by the startup-like work culture and the responsibility they trust you with. I was able to carry out my first upgrade deployment and to come up with an execution strategy and vision. Part of my job is to evangelize the BI initiative through training sessions so that all users are on board with us. I do see a lot of momentum for BI, and I am looking forward to keeping it up and moving forward!

How’s Houston?

Houston is hot and humid and always reminds me of my hometown, Chennai. It’s a large, busy city where you spend a considerable amount of time on the road, whether you like it or not :). We are slowly getting accustomed to the new city, which is extremely different from Baltimore and its cold climate. I will surely miss/escape the snowy days, and now I can never miss/escape the rainy days :( I miss all the good hearts I met in Baltimore, and I hope all my friends and colleagues reading this blog are doing well. Take care :)

-Junaith Haja